4. A MODEL OF THE INITIATIVE - COORDINATION TRADE-OFF

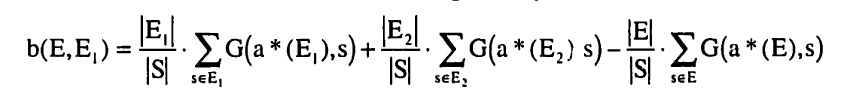

4.1 Basic Setup

The model considers two risk-neutral units ($j = A, B$; superscript). These units can be thought of as entrepreneurs allocating their effort or as businesses allocating their resources ("effort" will be used to mean both, unless otherwise noted).13 The units must choose between two tasks ($i = 1, 2$; subscript). The effort allocations are written as vectors

$$e^A = (e_1^A, e_2^A) \quad \text{and} \quad e^B = (e_1^B, e_2^B)$$

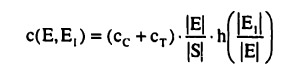

The cost of effort is assumed to be convex and for simplicity is given by

$$c(e^j) = \tfrac{1}{2}\,(e_1^j + e_2^j - E_0)^2 \quad \text{for } j = A, B$$

where E0 is a minimal effort level. In the resource interpretation, E0 might be a fixed capacity below which cost is constant.

The purpose of exerting effort and allocating it to the tasks is to obtain two types of benefits:

- Benefits from Initiative

The benefits from initiative depend on the level of effort in the tasks and are denoted by $p_i^j$, with the vector notation $p^j = (p_1^j, p_2^j)$ for $j = A, B$. The total benefit from initiative is given by

$$R_I = R_I^A + R_I^B = p^A \cdot e^A + p^B \cdot e^B$$

- Benefits from Coordination

The benefits from coordination depend on the allocation of efforts to tasks. A rather extreme form of coordination benefits is assumed: coordination benefits arise only when both units exert their entire effort on the same task. This assumption ensures that benefits from coordination and benefits from initiative can be easily separated in the interpretation of the results. The coordination benefits are denoted by $m = (m_1, m_2)$, and the total coordination benefit is given by

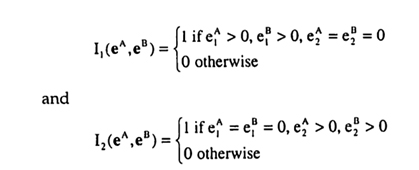

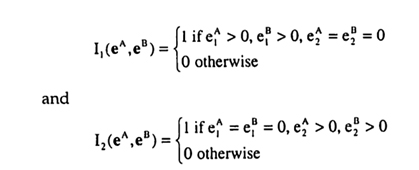

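$$R_C = m \cdot I(e^A, e^B)$$

where $I(e^A, e^B)$ is the coordination indicator: $I = (1, 0)$ if both units allocate their entire effort to task 1, $I = (0, 1)$ if both allocate their entire effort to task 2, and $I = (0, 0)$ otherwise.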

13 The basic setup is a one-shot setting. See Section 4.5 for a discussion of the effects of repetition.

The total benefit is then simply the sum of the benefit from initiative and the benefit from coordination:

$$R = R_I + R_C$$

It depends on the benefit parameters pA, pB and m and on the effort choices eA and eB.

Some of the benefits are realizations of random variables. To simplify the analysis, it is assumed that the benefits related to task 2, i.e. p2A, p2B, and m2 are fixed. Only the benefits related to task 1 are uncertain, as follows:

- $p_1^j$ is assumed to be uniformly distributed over $[L^j, H^j]$ for $j = A, B$ throughout

- various distributions for $m_1$ will be considered, but generally it will be assumed to be uniformly distributed over $[L_M, H_M]$

Unless stated otherwise, it will be assumed that $L^j < p_2^j < H^j$ and similarly that $L_M < m_2 < H_M$. All realizations are assumed to be independent of each other and across time.
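The benefit structure of the basic setup can be made concrete with a minimal Python sketch. The helper names (`coordination_indicator`, `effort_cost`, `total_benefit`) are hypothetical, effort vectors are plain `(task 1, task 2)` tuples, and the indicator encodes the all-or-nothing assumption above: (1, 0) if both units put all effort on task 1, (0, 1) if both put all effort on task 2, and (0, 0) otherwise. Treating zero total effort as "no task" is an assumption of the sketch.

```python
from typing import Optional, Tuple

Vec = Tuple[float, float]  # (task 1, task 2)


def dot(u: Vec, v: Vec) -> float:
    return u[0] * v[0] + u[1] * v[1]


def coordination_indicator(eA: Vec, eB: Vec) -> Vec:
    """(1, 0) if both units put all effort on task 1, (0, 1) if both put
    all effort on task 2, (0, 0) otherwise (split or mismatched tasks)."""
    def task_of(e: Vec) -> Optional[int]:
        if e[0] > 0 and e[1] == 0:
            return 1
        if e[1] > 0 and e[0] == 0:
            return 2
        return None  # split (or zero) effort: no single task

    tA, tB = task_of(eA), task_of(eB)
    if tA is not None and tA == tB:
        return (1.0, 0.0) if tA == 1 else (0.0, 1.0)
    return (0.0, 0.0)


def effort_cost(e: Vec, E0: float) -> float:
    """c(e^j) = 1/2 (e_1^j + e_2^j - E0)^2."""
    return 0.5 * (e[0] + e[1] - E0) ** 2


def total_benefit(pA: Vec, pB: Vec, m: Vec, eA: Vec, eB: Vec) -> float:
    """R = R_I + R_C with R_I = pA.eA + pB.eB and R_C = m.I(eA, eB)."""
    r_initiative = dot(pA, eA) + dot(pB, eB)
    r_coordination = dot(m, coordination_indicator(eA, eB))
    return r_initiative + r_coordination
```

For example, with $p^A = (6, 5)$, $p^B = (4, 5)$ and $m = (3, 2)$, `total_benefit` returns 65 when both units put all effort on task 1 (efforts 7 and 5), but only 58 when unit A splits its effort as (3, 4): the split forfeits the coordination benefit.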

4.2 Overview of the Analysis

This basic setup will be analyzed for a number of different assumptions about incentives and information. It functions much like a laboratory in which it is possible to conduct a variety of experiments. For incentives, two fundamental scenarios will be distinguished. In the team theory scenario, it is assumed that agents' incentives are aligned so that they will maximize the total benefit. In contrast, in the incentive scenario, it is assumed that agents maximize their individual benefits. The team theory scenario will be analyzed first and will provide a benchmark for comparison.14

14 To the best of my knowledge there are no other papers that analyze several substantially different IT settings from both a team-theory and an incentive perspective.

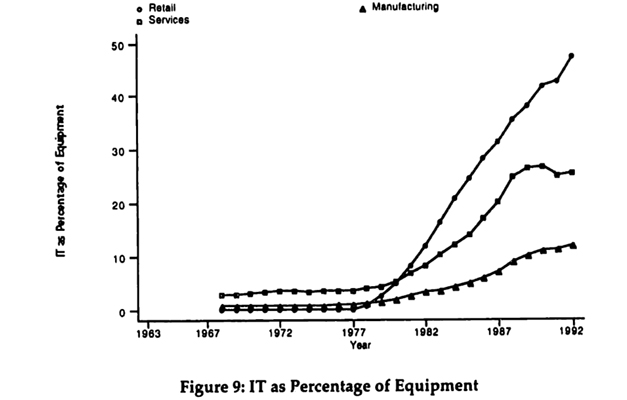

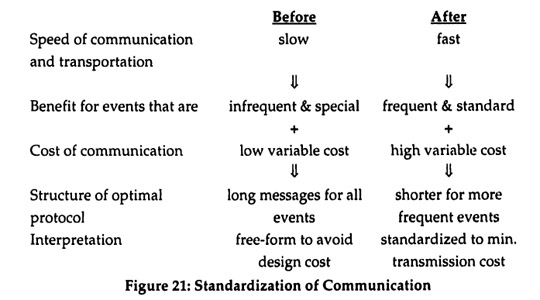

Throughout, it will be assumed that the benefits from initiative are observed locally in the units, i.e. unit A observes $p^A$ and unit B observes $p^B$. The benefits from coordination, $m$, are generally assumed to be observed by a central unit. Occasionally, it will also be discussed how the results would differ if the benefits from coordination were observed locally (e.g. if unit A observed $m_1$ and unit B observed $m_2$). All observations occur before the units need to choose their effort levels and are realizations of random variables, as described above. The availability and usage of IT determines how much of this information can be communicated between the units before efforts need to be chosen. Three settings will be distinguished, which coarsely reflect the evolution of IT, where IT is interpreted broadly to include both computation and communication.

- No IT

Without IT, communication is prohibitively slow and costly and hence the units must base their effort choices solely on their local observations of realizations and on the knowledge of the distributions of the variables. 15

- Centralized IT

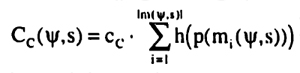

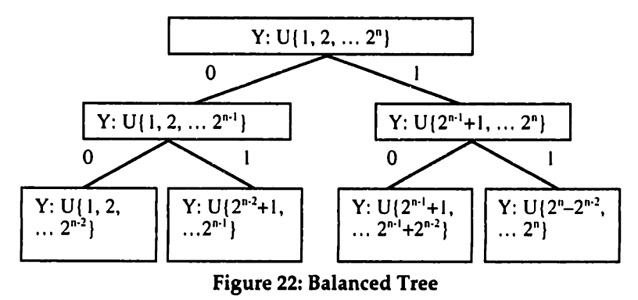

In the centralized IT setting, the local units can communicate the realizations pA and pB of the benefits from initiative to the center. The center thus has access to all information, including the realization m of the benefits from coordination. But the local units have limited computational power. Therefore, instead of being able to send all the information to the local units and letting them compute the optimal decision, the center must carry out the computation. The essence of such central computations is that they take a large number of inputs and reduce them to a few outputs. In order to avoid modeling difficulties, the output is assumed to be restricted to a single bit.16 This should not be seen as an asymmetry in the communication channel, but rather be compared to the information available to the respective sender: the local units send most (here: all) of their information, whereas the center carries out a computation and sends only the result.

15 This does not imply that communication is impossible; it is merely too slow and costly for the type of repeated observation and reaction considered here.

16 If the output were a real number, the center could potentially give highly sophisticated commands, which would be difficult to model. This problem is closely related to the information coding problem discussed in a separate paper (Wenger 1997a).

- Networked IT

Finally, with networked IT it is possible to share all observations. The units A and B can thus base their choice of efforts on a complete knowledge of all the benefits pA, pB, and m. The assumption here is that not only do high-speed networks make it possible to communicate all information, but there is also enough local computing power available to make use of it.

These assumptions about IT express capabilities only.17 Whether or not the units actually use these capabilities to truthfully report their observations is a separate issue. Truthtelling will be assumed for the team theory scenario but not for the incentive scenario.

4.3 Team Theory Scenario

4.3.1 Measures for Comparison

In order to have a unified way for comparing the results in the different settings and scenarios, it will be useful to introduce the following two measures:

- Degree of Coordination

Coordination will be said to occur when the units deviate from their locally optimal choice in order to obtain the coordination benefit.18 With perfect coordination, the units deviate only when doing so is in fact optimal. There are three possible errors: deviation when it is not optimal (over-coordination), no deviation when it would be optimal (under-coordination), and the wrong deviation (mis-coordination).19 The degree of coordination is therefore the percentage of realizations of $p_1^A$, $p_1^B$, and $m_1$ for which no coordination errors are made.

- Degree of Initiative

Initiative is the effort undertaken by the units. To separate its measurement from the measurement of coordination, effort will be considered conditional on the choice of tasks. This is easiest to do by considering task 2 only, since the benefit from initiative for task 2 is fixed by assumption. The degree of initiative is therefore the effort expended, as a percentage of the socially optimal effort, when task 2 is chosen.

17 The approach taken here is different from Malone and Wyner (1996). Their paper represents three organizational forms by reduced expressions that include both the cost and benefits from IT. The current paper, however, changes only the IT setting and treats the organizational form as endogenous.

18 This may appear to be an awkward definition, but its utility in measuring coordination will become apparent during the analysis.

19 For an example of mis-coordination, consider the following: the locally optimal choices are task 2 for unit A and task 1 for unit B; taking coordination into account, it would be optimal for both to choose task 2, yet they wind up both choosing task 1. They do coordinate, i.e. unit A deviates from its locally optimal task, but unit B should have been the one to deviate.

Both measures range between 0 and 100%. The rationale behind the particular choices of measures will become clearer as they are used below to compare various settings in the different scenarios.
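The three error types can be expressed as a small classification function. This is a hedged sketch: task pairs are `(A, B)` tuples, a unit "deviates" when its actual task differs from its locally optimal one, and the labels mirror those used in the No IT summary table. Cases in which only one of two required deviations occurs are lumped into "mis" here, which is an assumption of the sketch rather than a definition from the text.

```python
from typing import Tuple

Choice = Tuple[int, int]  # (task of unit A, task of unit B)


def classify(optimal: Choice, local: Choice, actual: Choice) -> str:
    """Label a task choice as error-free or as an over/under/mis-coordination error."""
    deviation_needed = optimal != local  # optimum differs from the local choice
    deviation_made = actual != local     # at least one unit left its local choice
    if actual == optimal:
        return "optimal" if deviation_needed else "none required"
    if not deviation_needed:
        return "over"   # deviated although no deviation was optimal
    if not deviation_made:
        return "under"  # should have deviated but did not
    return "mis"        # deviated, but not in the optimal way
```

Applied to the example of footnote 19, `classify(optimal=(2, 2), local=(2, 1), actual=(1, 1))` returns `"mis"`.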

4.3.2 Networked IT (Team Theory Scenario)

The Networked IT setting is the easiest to analyze since here all information can be communicated. With costless communication, it is always optimal in a team theory setting to actually communicate all the information (Marschak and Radner 1972). The team members can then each solve the overall optimization problem and locally make their choices according to this socially optimal solution. The Networked IT setting in the team theory scenario also provides a natural base for comparison. All other situations involve some type of constraint on the available information, the incentives, or both.

The decision rule for the Networked IT setting can be derived as follows. Given the assumptions, it is never optimal for a local unit (A or B) to split its effort between the two tasks. Suppose splitting were optimal. This would result in

$$R_C = m \cdot I(e^A, e^B) = (m_1, m_2) \cdot (0, 0) = 0$$

But with no benefit from coordination, the benefit from initiative can be maximized freely (there can be no loss of coordination benefit). Since the benefit from initiative is linear by assumption, it is optimal for each unit to allocate all effort to the task with the higher benefit. Hence, splitting could not have been optimal.

With splitting ruled out, the decision rule consists of comparing the total benefit from four different possible optimizations (depending on which tasks are chosen, as indicated in the first two columns):

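| A | B | Optimization |
|---|---|---|
| 1 | 1 | $\max\; p_1^A e_1^A + p_1^B e_1^B + m_1 - \tfrac{1}{2}(e_1^A - E_0)^2 - \tfrac{1}{2}(e_1^B - E_0)^2$ |
| 1 | 2 | $\max\; p_1^A e_1^A + p_2^B e_2^B - \tfrac{1}{2}(e_1^A - E_0)^2 - \tfrac{1}{2}(e_2^B - E_0)^2$ |
| 2 | 1 | $\max\; p_2^A e_2^A + p_1^B e_1^B - \tfrac{1}{2}(e_2^A - E_0)^2 - \tfrac{1}{2}(e_1^B - E_0)^2$ |
| 2 | 2 | $\max\; p_2^A e_2^A + p_2^B e_2^B + m_2 - \tfrac{1}{2}(e_2^A - E_0)^2 - \tfrac{1}{2}(e_2^B - E_0)^2$ |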

As can easily be seen, all four of these optimizations are completely separable into an optimization for unit A and one for unit B. Each of the smaller problems is of the form

$$\max_{e_i^j}\; p_i^j e_i^j - \tfrac{1}{2}(e_i^j - E_0)^2 \quad \text{for } i = 1, 2 \text{ and } j = A, B$$

with the solution

$$e_i^{j*} = p_i^j + E_0 \quad \text{for } i = 1, 2 \text{ and } j = A, B$$

The socially optimal effort choice for task 2, $e_2^{j*}$, will later be used to compute the degree of initiative. It is now straightforward to determine the optimized values and compare them in order to determine the optimal choice of tasks.
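For reference, the first-order condition and the optimized value of each separable problem can be written out. The shorthand $V(\cdot)$ for the optimized value is introduced here for convenience and is not the paper's notation:

$$\frac{\partial}{\partial e_i^j}\Big[p_i^j e_i^j - \tfrac{1}{2}(e_i^j - E_0)^2\Big] = p_i^j - (e_i^j - E_0) = 0 \;\;\Longrightarrow\;\; e_i^{j*} = p_i^j + E_0$$

$$V(p_i^j) \equiv p_i^j e_i^{j*} - \tfrac{1}{2}(e_i^{j*} - E_0)^2 = \tfrac{1}{2}(p_i^j)^2 + p_i^j E_0$$

The four optimized totals are then

$$(1,1):\; V(p_1^A) + V(p_1^B) + m_1 \qquad (1,2):\; V(p_1^A) + V(p_2^B)$$

$$(2,1):\; V(p_2^A) + V(p_1^B) \qquad (2,2):\; V(p_2^A) + V(p_2^B) + m_2$$

and the optimal task pair is the one with the largest total. For instance, at $p_1^A = p_2^A$, $p_1^B = p_2^B$ and $m_1 = m_2$, the (1,1) and (2,2) totals coincide, consistent with the (1,1)/(2,2) boundary passing through $(p_2^A, p_2^B)$ in that case.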

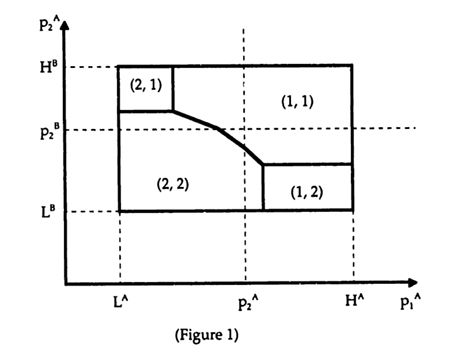

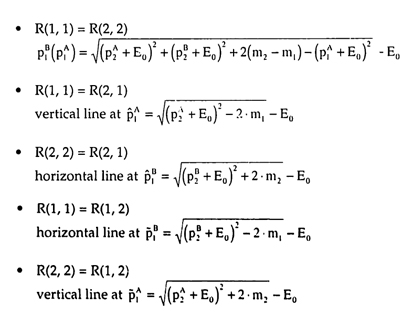

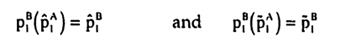

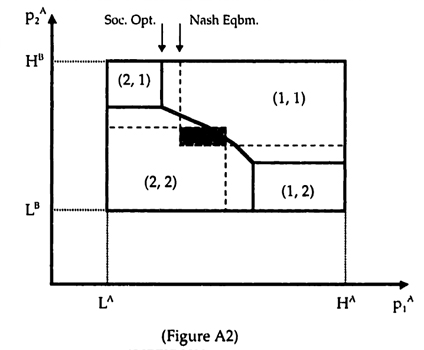

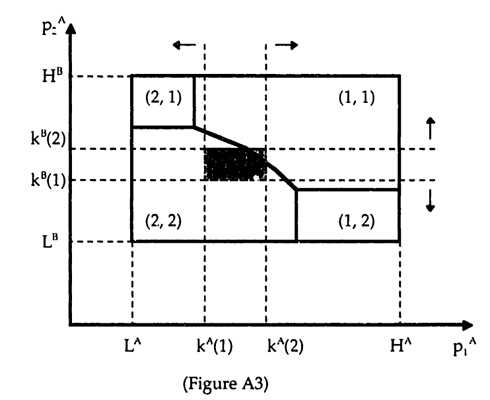

Given the assumptions about the structure of the uncertainty, there is a convenient way of graphing the resulting decision rule. For a given realization of m, the optimal choice of tasks can be graphed as a phase diagram in (p1A, p1B) space, since p2A, p2B, and m2 are fixed by assumption. The shape of the phase diagram is derived in Appendix A and shown in Figure 1.

The notation (A, B) indicates the tasks to which the units should optimally allocate their effort in each region of the phase diagram. It is important to note that, while the shape of the diagram is always the same, the exact size of the regions depends on the benefit from coordination. With $m_2$ fixed by assumption, the size depends on the realization of $m_1$. As shown in Appendix A1, for $m_1 = m_2$ the curve separating the (1,1) and (2,2) regions passes right through the point $(p_2^A, p_2^B)$. For $m_1 > m_2$ the curve passes below this point, and for $m_1 < m_2$ it passes above it.

Using this phase diagram, the decision rule can be stated in the following way that facilitates the further analysis and clarifies its implications:

Networked IT Decision Rule

- Communicate all information. Then determine the task to which effort is allocated by drawing the phase diagram for the realization of $m_1$ and seeing in which region the point $(p_1^A, p_1^B)$ falls.

- Choose the effort level for the correct task $i$ according to $e_i^{j*} = p_i^j + E_0$.

In this formulation, part 1 of the decision rule takes care of coordination, i.e. the choice of task. From the derivation it follows that 100% coordination is achieved. Part 2 of the decision rule determines initiative, i.e. the degree of effort. Again, it follows from the derivation that 100% initiative is achieved.
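The Networked IT decision rule can be sketched in Python by enumerating the four task pairs, adding the coordination benefit only when both units pick the same task (part 1), and then applying the effort rule (part 2). The function names and example parameter values are hypothetical; exact ties between task pairs are resolved by Python's `max` returning the first maximum, a case the text does not address.

```python
from itertools import product
from typing import Dict, Tuple


def optimized_value(p: float, E0: float) -> float:
    """Value of max_e p*e - 1/2 (e - E0)^2, attained at e* = p + E0."""
    e_star = p + E0
    return p * e_star - 0.5 * (e_star - E0) ** 2


def networked_rule(p1A, p1B, m1, p2A, p2B, m2, E0):
    """Return the optimal task pair (A, B) and the efforts (eA*, eB*)."""
    pA = {1: p1A, 2: p2A}
    pB = {1: p1B, 2: p2B}
    m = {1: m1, 2: m2}
    values: Dict[Tuple[int, int], float] = {}
    for i, k in product((1, 2), repeat=2):
        v = optimized_value(pA[i], E0) + optimized_value(pB[k], E0)
        if i == k:
            v += m[i]  # coordination benefit only when both pick the same task
        values[(i, k)] = v
    best = max(values, key=values.get)               # part 1: choice of tasks
    efforts = (pA[best[0]] + E0, pB[best[1]] + E0)   # part 2: e* = p + E0
    return best, efforts
```

With $E_0 = 1$, $p_2^A = p_2^B = 5$, $p_1^A = 6$, $p_1^B = 4$, $m_1 = 0$ and $m_2 = 10$, the rule returns (2, 2) with efforts (6, 6): unit A gives up its locally better task 1 to capture the large coordination benefit on task 2.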

As will be seen shortly, in the team theory scenario, changes in the IT setting affect only part 1 of this decision rule and hence the degree of coordination. Part 2 remains the same and hence there is always 100% initiative. This separability is not really a result, since it was built in by assuming that the coordination benefit is independent of the level of effort. The reason for making this assumption is to clarify the source of the tradeoff between initiative and coordination: In the incentive scenario, separability breaks down, and a tradeoff between initiative and coordination exists.

Another implication of the decision rule for Networked IT is noteworthy. The central unit's only role is to communicate its observation of the benefits from coordination. The central unit is not required for decision making, since the required decision-making can take place in a decentralized fashion in the local units. Therefore, if unit A were to observe $m_1$ and unit B were to "observe" $m_2$ (it is assumed to be fixed), or the other way round, there would be no role at all for a central unit.

4.3.3 Centralized IT (Team Theory Scenario)

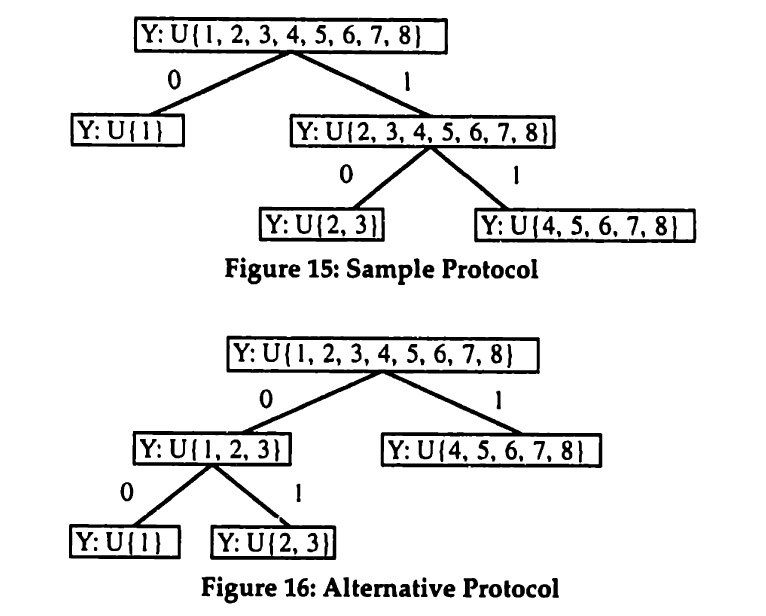

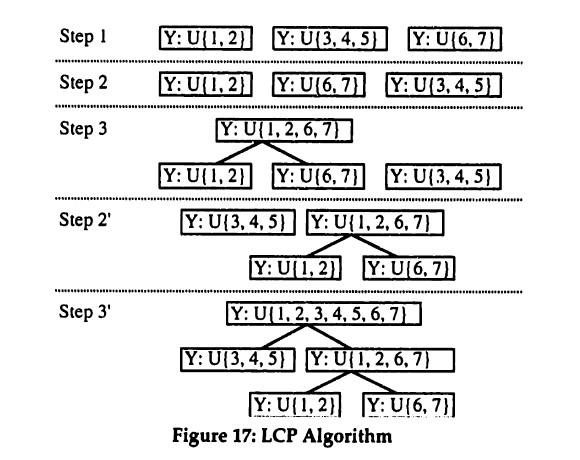

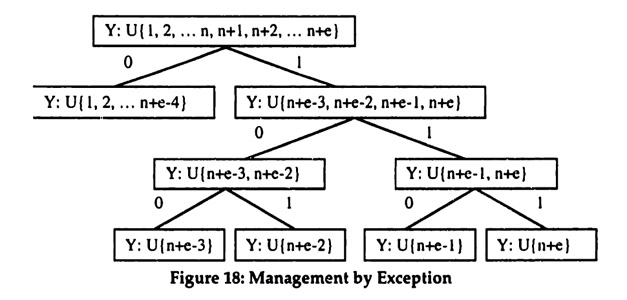

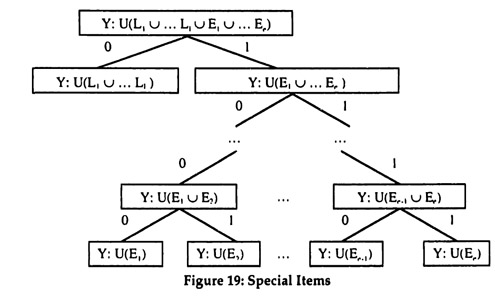

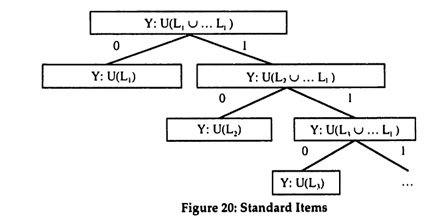

With Centralized IT, the central unit can obtain all information: it observes the realization $m$ of the benefits from coordination, and the local units A and B can communicate their respective benefits from initiative, $p^A$ and $p^B$, to the center. The central unit can now draw the phase diagram and determine the optimal choice of tasks. It can then use one bit per unit to communicate the task choice back to the units, e.g. $\text{bit}^j = 0$ means "choose task 1" and $\text{bit}^j = 1$ means "choose task 2" (for $j = A, B$). This is summarized in the following decision rule:

Centralized IT Decision Rule

- Communicate local observations to the center, which sends back the correct task.

- Choose the effort level for the correct task $i$ according to $e_i^{j*} = p_i^j + E_0$.
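The single-bit protocol of the Centralized IT setting can be sketched as two hypothetical functions: `center`, which sees all realizations and returns one bit per unit (0 for task 1, 1 for task 2, as in the text), and `local_unit`, which decodes its bit and needs only its own benefits to set its effort. The closed form $p^2/2 + pE_0$ for the optimized value follows from the effort rule $e^* = p + E_0$.

```python
from itertools import product


def optimized_value(p: float, E0: float) -> float:
    # max_e p*e - 1/2 (e - E0)^2 = p^2/2 + p*E0, attained at e* = p + E0
    return 0.5 * p * p + p * E0


def center(p1A, p1B, m1, p2A, p2B, m2, E0):
    """Center: compute the optimal task pair and return one bit per unit.

    bit = 0 means "choose task 1", bit = 1 means "choose task 2".
    """
    pA, pB, m = (p1A, p2A), (p1B, p2B), (m1, m2)

    def value(i, k):
        v = optimized_value(pA[i], E0) + optimized_value(pB[k], E0)
        return v + (m[i] if i == k else 0.0)

    i, k = max(product((0, 1), repeat=2), key=lambda ik: value(*ik))
    return i, k  # the 0-based task index doubles as the bit


def local_unit(bit: int, p1: float, p2: float, E0: float):
    """Local unit: decode the bit and choose effort e* = p + E0 for that task."""
    task = bit + 1
    p = p1 if task == 1 else p2
    return task, p + E0
```

The asymmetry described in the text is visible here: the units send all their information to `center`, which compresses it into two bits.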

In the team theory scenario, Centralized IT can thus achieve exactly the same outcome as Networked IT, i.e. 100% coordination and 100% initiative. Now, however, the central unit plays a crucial role, since it actually chooses the correct tasks. Once each local unit has received its central command, it can choose the optimal effort level, since this only requires it to know the benefit from initiative which it has observed locally. Again, the analysis would not change much if the benefits from coordination were also observed locally.

4.3.4 No IT (Team Theory Scenario)

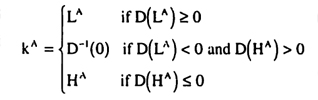

Without IT, the units must base their choice of tasks solely on their local observation of the benefits from initiative and their knowledge of the distribution of the benefits from coordination. In Appendix A2 it is shown that the following simple decision rule is optimal for this setting:

No IT Decision Rule

- If $p_1^j$ is above the cutoff $k^j$, choose task 1; if it is below the cutoff, choose task 2.

- Choose the effort level for the correct task $i$ according to $e_i^{j*} = p_i^j + E_0$.

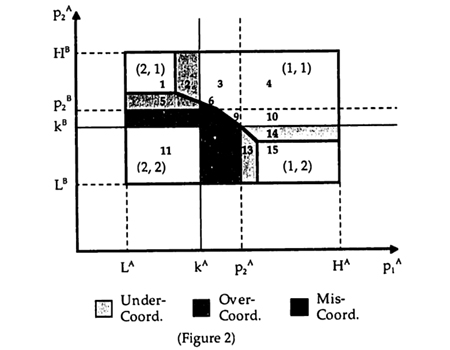

Without IT, it will in general no longer be possible to achieve perfect coordination. Consider the phase diagram in Figure 2, which was drawn for a particular (albeit unobserved) realization of $m_1$.

The thick lines in Figure 2 mark the areas for the optimal task choices, which are indicated in the four corners. The solid lines at $k^A$ and $k^B$ determine the four quadrants of actual task choices, whereas the dotted lines at $p_2^A$ and $p_2^B$ determine the four quadrants of locally optimal choices. The following table summarizes the choices for each area:

| Area | Optimal | Local | Actual | Coordination |
|---|---|---|---|---|
| 1 | (2,1) | (2,1) | (2,1) | none required |
| 2 | (1,1) | (2,1) | (2,1) | under |
| 3 | (1,1) | (2,1) | (1,1) | optimal |
| 4 | (1,1) | (1,1) | (1,1) | none required |
| 5 | (2,2) | (2,1) | (2,1) | under |
| 6 | (2,2) | (2,1) | (1,1) | mis |
| 7 | (2,2) | (2,2) | (2,1) | over |
| 8 | (2,2) | (2,2) | (1,1) | over |
| 9 | (1,1) | (2,2) | (1,1) | optimal |
| 10 | (1,1) | (1,2) | (1,1) | optimal |
| 11 | (2,2) | (2,2) | (2,2) | none required |
| 12 | (2,2) | (2,2) | (1,2) | over |
| 13 | (2,2) | (1,2) | (1,2) | under |
| 14 | (1,1) | (1,2) | (1,2) | under |
| 15 | (1,2) | (1,2) | (1,2) | none required |

As Figure 2 and the summary table show, there are areas in which coordination errors are made. In areas 2,5,13, and 14 coordination would be required but does not take place (under-coordination). In areas 7,8, and 12 coordination is not required but does take place (over-coordination). Finally, in area 6 coordination is required, but the wrong coordination occurs (mis-coordination). Hence the degree of coordination is strictly less than 100%.
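The degree of coordination without IT can be estimated by simulation. In the summary table above, the areas without coordination errors are exactly those in which the actual choice equals the optimal one, so the sketch below counts how often the cutoff rule picks the socially optimal task pair. It is an illustration under assumptions: all names and parameter values are hypothetical, and the cutoffs are supplied by the caller rather than set to the optimal cutoffs derived in Appendix A2.

```python
import random
from typing import Tuple


def optimized_value(p: float, E0: float) -> float:
    """max_e p*e - 1/2 (e - E0)^2, attained at e* = p + E0."""
    return 0.5 * p * p + p * E0


def optimal_tasks(p1A, p1B, m1, p2A, p2B, m2, E0) -> Tuple[int, int]:
    """Socially optimal task pair (A, B), computed with full information."""
    pA, pB, m = {1: p1A, 2: p2A}, {1: p1B, 2: p2B}, {1: m1, 2: m2}

    def value(pair):
        i, k = pair
        v = optimized_value(pA[i], E0) + optimized_value(pB[k], E0)
        return v + (m[i] if i == k else 0.0)

    return max([(1, 1), (1, 2), (2, 1), (2, 2)], key=value)


def degree_of_coordination(kA, kB, LA, HA, LB, HB, LM, HM,
                           p2A, p2B, m2, E0, n=20_000, seed=0):
    """Monte Carlo share of draws in which the No IT cutoff rule is error-free."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        p1A = rng.uniform(LA, HA)
        p1B = rng.uniform(LB, HB)
        m1 = rng.uniform(LM, HM)  # not observed by the units
        actual = (1 if p1A > kA else 2, 1 if p1B > kB else 2)  # cutoff rule
        if actual == optimal_tasks(p1A, p1B, m1, p2A, p2B, m2, E0):
            hits += 1
    return hits / n
```

With no coordination benefits and cutoffs at $k^j = p_2^j$, the estimate is 1.0, in line with the first extreme case discussed in the next paragraph; with $m_1$ uniform on [0, 10] and $m_2 = 5$, the same cutoffs produce coordination errors and the estimate falls below 1.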

The degree of coordination is lowest for "moderate" distributions of the benefits from coordination, i.e. when coordination matters but is not overwhelming. The closer the distribution is to one of two extreme cases, the higher the degree of coordination. The first extreme case occurs when there are no benefits from coordination ($m_1 = m_2 = 0$ always). The second extreme case occurs when the benefits from coordination are so overwhelming that a unique choice of tasks (e.g. both units always choosing task 1) is optimal. In both extreme cases, 100% coordination can be achieved even without IT.

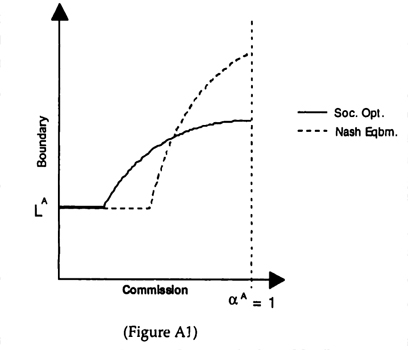

Without IT, there will be a significant difference if the benefits from coordination are observed by the local units. In particular, if unit A were to observe $m_1$, it would condition its cutoff on the observation, i.e. $k^A(m_1)$. It is easily seen that the cutoff would have to be lower for higher realizations of $m_1$, since a higher $m_1$ makes coordinating on task 1 more valuable.

4.3.5 Summary of Team Theory Scenario

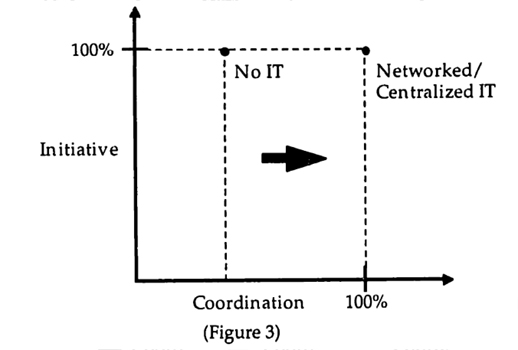

The analysis of the team theory scenario thus provides a number of important insights.

- The degree of initiative is 100% for all IT settings.

- In both the Networked IT and the Centralized IT setting, 100% coordination can be achieved for any distribution of the benefits from coordination.

- In the No IT setting, coordination degrades, with the degree of coordination lowest when both initiative and coordination matter.

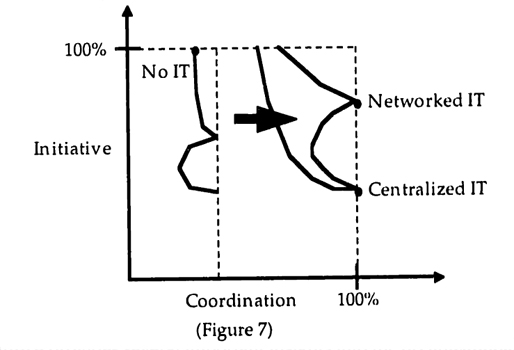

These insights can be summarized in the following diagram (Figure 3), which will later be used for comparisons to the incentive scenario.

Figure 3 assumes distributions of the parameters for which both the benefits from initiative and the benefits from coordination matter. The dots mark the maximum coordination that can be achieved for each of the IT settings. The dotted lines indicate that in each IT setting it is also possible to have less coordination and/or less initiative, if a non-optimal decision rule were used. For instance, in the No IT setting, if the cutoff in the decision rule is too low or too high there will be less coordination than can be achieved with the optimal cutoff.

In other words, the dotted line is the initiative-coordination frontier for an IT setting. More IT shifts the frontier to the right, as indicated by the arrow. Given the definitions of the measures, more initiative and more coordination are desirable. Therefore, given the rectangular shape of the frontiers, the unique optimum is the point in the top right corner, as indicated.

4.4 Incentive Scenario

4.4.1 Additional Assumptions

The team theory scenario is built on the premise that all agents will optimize the overall benefit rather than their individual benefits. In most organizational settings this is rather unrealistic. Introducing incentive issues affects the analysis in two major ways. First, agents will choose effort to maximize their own benefit given their incentive scheme. Second, agents will not necessarily truthfully reveal the information available to them but must be given incentives to do so.

The incentives will be based on two measures of the benefit, one for unit A and one for unit B, as follows:

$$R^A = R_I^A + 0.5 \cdot R_C = p^A \cdot e^A + 0.5 \cdot m \cdot I(e^A, e^B)$$

$$R^B = R_I^B + 0.5 \cdot R_C = p^B \cdot e^B + 0.5 \cdot m \cdot I(e^A, e^B)$$

It can easily be seen that the two measures sum to the total benefit. The measures reflect the idea that the benefit from coordination cannot be attributed to either unit and is therefore split between them. This could be the result of an allocation rule by the center or the outcome of a bargaining process between the two units. 20

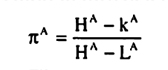

The analysis will be restricted to incentive schemes that are linear in these two measures. The "wages" for the two units thus take the following form:

$$w^A = \alpha_0 + \alpha_A R^A + \alpha_B R^B$$

$$w^B = \beta_0 + \beta_A R^A + \beta_B R^B$$

The weights on the two measures will often be referred to as the "commission rates." They should be interpreted as a reduced-form representation of a large number of incentive instruments, which include explicit commissions but also ownership, subjective performance measures, and others.
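The two performance measures and the linear wage schemes can be checked numerically. In this sketch the function names and numbers are hypothetical; it confirms that the measures sum to the total benefit and illustrates why giving both units full commissions is infeasible: the wages then add up to twice the total benefit.

```python
from typing import Tuple

Rates = Tuple[float, float, float]  # (fixed part, weight on R^A, weight on R^B)


def measures(RIA: float, RIB: float, RC: float) -> Tuple[float, float]:
    """R^A = R_I^A + 0.5 R_C and R^B = R_I^B + 0.5 R_C (50:50 split of R_C)."""
    return RIA + 0.5 * RC, RIB + 0.5 * RC


def wages(RA: float, RB: float, alpha: Rates, beta: Rates) -> Tuple[float, float]:
    """Linear schemes w^A = a0 + aA R^A + aB R^B and w^B = b0 + bA R^A + bB R^B."""
    a0, aA, aB = alpha
    b0, bA, bB = beta
    return a0 + aA * RA + aB * RB, b0 + bA * RA + bB * RB
```

With $R_I^A = 42$, $R_I^B = 20$ and $R_C = 3$, the measures are 43.5 and 21.5 (summing to 65); full commissions on both measures for both units yield wages of 65 each, while the entrepreneur rates (each unit paid its own measure) yield wages that exactly exhaust the total.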

20 If there were N units then this model would represent a coordination opportunity between any two of them. Alternatively, one could explore the effects of a situation where the coordination benefit is spread across many units.

Focusing on linear schemes is less restrictive than it may at first appear. The units can observe the realizations of at least some of the benefit parameters before making their choices. Therefore they can condition their level of effort on their observations. In such a case, non-linear schemes can result in large distortions, and it has been shown that linear schemes may be optimal (Holmstrom and Milgrom 1987).

The measures also intentionally do not include the cost of effort. If the cost were completely measurable, there would be no incentive problem. By setting the commissions to $\alpha_A = \alpha_B = \beta_A = \beta_B = 0.5$, each unit would face exactly the same optimization problem as in the team theory scenario (scaled by a constant factor of 0.5, which of course does not affect the choices). The assumption that cost cannot be measured is readily justifiable when the units are individuals, since effort costs are subjective and therefore impossible to measure directly. In the case of businesses allocating resources, the assumption may appear less justified, since one might argue that accounting cost could be used. This would, however, not be appropriate for three reasons. First, the units are likely to have many activities (aside from the ones modeled), and hence their accounting cost is subject to various manipulations, such as the allocation of overhead. Second, the relevant cost concept is opportunity cost, which may diverge widely from the recorded accounting cost even without any manipulation. Third, the cost may reflect additional personal costs or benefits to the managers of the businesses, which are difficult to measure.

4.4.2 Networked IT (Incentive Scenario)

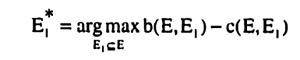

To analyze the Networked IT setting, it will first be assumed that both units truthfully report their information. Note that the revelation principle cannot be applied here, since the contracts have been restricted to linear schemes. The remaining incentive problem is then the choice of effort. Consider Unit A's optimization problem, treating Unit B's effort choices as given:

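$$\max_{e^A}\; w^A - c(e^A) = \max_{e^A}\; \alpha_0 + \alpha_A\, p^A \cdot e^A + \alpha_B\, p^B \cdot e^B + 0.5\,(\alpha_A + \alpha_B)\, m \cdot I(e^A, e^B) - \tfrac{1}{2}(e_1^A + e_2^A - E_0)^2$$

where the term $\alpha_B\, p^B \cdot e^B$ is a constant from unit A's perspective.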

By the same argument as in the team theory scenario, it is never optimal for Unit A (and hence also for Unit B) to split its effort between the two tasks. Therefore, Unit A's optimization can be reduced to two smaller problems of the form

$$\max_{e_i^A}\; \alpha_A\, p_i^A e_i^A - \tfrac{1}{2}(e_i^A - E_0)^2 \quad \text{for } i = 1, 2$$

with the solutions

$$e_i^{A*} = \alpha_A\, p_i^A + E_0 \quad \text{for } i = 1, 2$$

The problems and solutions are analogous for unit B.
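Written out, the first-order condition for unit A under commission rate $\alpha_A$ and the resulting value of its own initiative term are as follows (the coordination term is left out because, for a given task pair, it does not depend on the effort level):

$$\frac{\partial}{\partial e_i^A}\Big[\alpha_A p_i^A e_i^A - \tfrac{1}{2}(e_i^A - E_0)^2\Big] = \alpha_A p_i^A - (e_i^A - E_0) = 0 \;\;\Longrightarrow\;\; e_i^{A*} = \alpha_A p_i^A + E_0$$

$$\alpha_A p_i^A e_i^{A*} - \tfrac{1}{2}(e_i^{A*} - E_0)^2 = \tfrac{1}{2}\alpha_A^2 (p_i^A)^2 + \alpha_A p_i^A E_0$$

Compared with the team-theory effort $p_i^A + E_0$, effort is lower whenever $\alpha_A < 1$ and $p_i^A > 0$.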

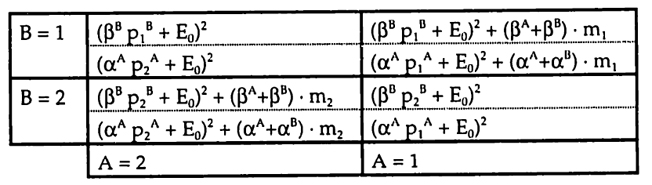

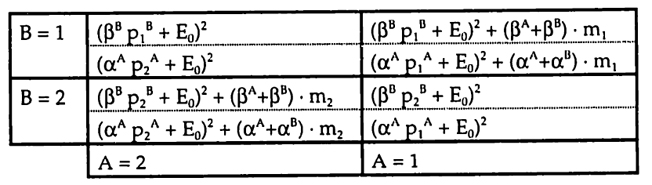

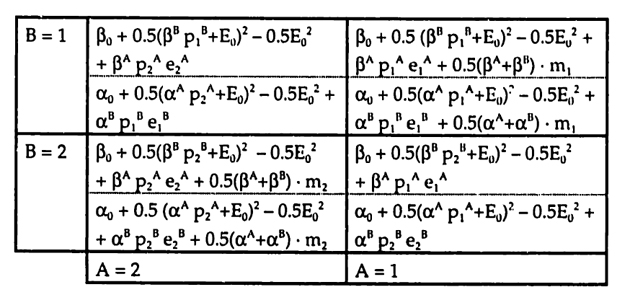

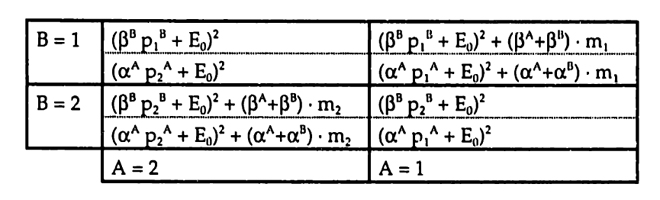

Given these solutions and the fact that neither unit will split its effort between the two tasks, it is straightforward to represent the game played between the two units in normal form (the relevant simplifications are shown in Appendix A3.1). 21

21 The somewhat unusual layout of the normal form was chosen to reflect the orientation of the phase diagram from the team theory scenario.

By inspecting the normal form, it is possible to discern the effects of changes in the various commission rates (see Appendix A3.2 for a more formal analysis, as well as Section 4.5 for a discussion of the effects of repetition):

- αA and βB

These commission rates affect all four payoffs for units A and B respectively. But their relative effect is stronger for payoff terms without coordination benefit (i.e. A = 1 / B = 2 and A = 2 / B = 1). Hence decreasing these rates makes the outcomes with coordination benefit more likely. This effect can be understood best by examining the special case of αA = βB = 0.

- αB and βA

These commission rates affect only the payoff terms with coordination benefit (i.e. A = 1 / B = 1 and A = 2 / B = 2). Hence increasing these rates makes the outcomes with coordination benefit more likely. This effect can be understood best by starting with αA = βB = 0.5 and αB = βA = -0.5 and then increasing αB and βA to 0 or even to 0.5.

The commission rates thus have different effects and can be used to guide the game played between the two units.
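To make the effect of the commission rates on the game concrete, the following Python sketch computes each unit's payoff (wage minus effort cost) for the four task pairs and lists the pure-strategy equilibria. It rests on assumptions: efforts follow $e_i^{A*} = \alpha_A p_i^A + E_0$ and $e_k^{B*} = \beta_B p_k^B + E_0$, payoffs are computed directly from the wage definitions rather than taken from the normal form of Appendix A3.1 (which may use a different normalization), and all names and numbers are hypothetical.

```python
from itertools import product
from typing import Dict, List, Tuple

Rates = Tuple[float, float, float]  # (fixed part, weight on R^A, weight on R^B)


def payoffs(i: int, k: int, p: Dict[str, Tuple[float, float]],
            alpha: Rates, beta: Rates, m: Tuple[float, float],
            E0: float) -> Tuple[float, float]:
    """Payoffs (wage minus effort cost) when A works on task i and B on task k."""
    a0, aA, aB = alpha
    b0, bA, bB = beta
    pA, pB = p["A"][i - 1], p["B"][k - 1]
    eA, eB = aA * pA + E0, bB * pB + E0      # effort rules from the text
    RC = m[i - 1] if i == k else 0.0         # coordination benefit only if i == k
    RA, RB = pA * eA + 0.5 * RC, pB * eB + 0.5 * RC
    uA = a0 + aA * RA + aB * RB - 0.5 * (eA - E0) ** 2
    uB = b0 + bA * RA + bB * RB - 0.5 * (eB - E0) ** 2
    return uA, uB


def pure_equilibria(p, alpha: Rates, beta: Rates, m,
                    E0: float) -> List[Tuple[int, int]]:
    """Task pairs from which neither unit gains by unilaterally switching tasks."""
    eqs = []
    for i, k in product((1, 2), repeat=2):
        uA, uB = payoffs(i, k, p, alpha, beta, m, E0)
        a_dev = payoffs(3 - i, k, p, alpha, beta, m, E0)[0]  # A switches task
        b_dev = payoffs(i, 3 - k, p, alpha, beta, m, E0)[1]  # B switches task
        if a_dev <= uA and b_dev <= uB:
            eqs.append((i, k))
    return eqs
```

With $p^A = (6, 5)$, $p^B = (4, 5)$, $m = (0, 10)$ and $E_0 = 1$, the entrepreneur rates ($\alpha_A = \beta_B = 1$, $\alpha_B = \beta_A = 0$) yield the single equilibrium (1, 2), even though (2, 2) is the team-theory optimum for these values, whereas balanced rates of 0.5 on both measures yield (2, 2). With all rates at zero, every task pair is an equilibrium, since the units are indifferent.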

Two special cases, the individual entrepreneurs and the bureaucracy, will provide additional intuition.

- αA = βB = 1 and αB = βA = 0

These commission rates correspond to the case of individual entrepreneurs who own their respective businesses. They are residual claimants and thus obtain their full benefit, including a 50:50 split of the benefits from coordination as the result of bargaining between them. The first insight is that in this case the conditions for the choice of effort are the same as in the team theory scenario:

$$e_i^{A*} = \alpha_A\, p_i^A + E_0 = p_i^A + E_0$$

$$e_i^{B*} = \beta_B\, p_i^B + E_0 = p_i^B + E_0$$

Hence the degree of effort is the same, i.e. 100%. The second key insight is that the degree of coordination is lower than in the team theory scenario. The intuition is that coordination requires deviations from the locally optimal choice. Since the entrepreneurs obtain the full benefits from initiative but only half the benefits from coordination, they will deviate less frequently from the locally optimal choice than would be necessary for 100% coordination.22

- αA = αB = βA = βB = 0

These commission rates correspond to the case of bureaucracy. The units have no ownership and do not receive any explicit incentive pay. The combination of the various incentive instruments results in wages that are completely flat, i.e. the individual benefits are fixed. By inspection of the optimization problems above, it follows immediately that the effort level will drop to $E_0$. The degree of effort is thus less than 100% as long as $p_2^j > 0$ for $j = A, B$:

$$\frac{E_0}{p_2^j + E_0} < 1 \quad \text{if } p_2^j > 0$$

Bureaucracy, however, can achieve 100% coordination. The payoffs in the normal form are $E_0^2$ everywhere, and the units are thus indifferent between the choices of tasks.

The two special cases thus fit the intuition that was developed earlier for the tradeoff between initiative and coordination.
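Because effort on task 2 under the linear scheme is $e_2^{A*} = \alpha_A p_2^A + E_0$, the degree of initiative is a simple ratio that moves linearly from the bureaucratic level $E_0/(p_2^j + E_0)$ at a zero commission to 100% at a commission of 1. A minimal Python sketch (the function name and the values $p_2 = 5$, $E_0 = 1$ are hypothetical):

```python
def degree_of_initiative(commission: float, p2: float, E0: float) -> float:
    """Effort on task 2, commission * p2 + E0, relative to the team optimum p2 + E0."""
    return (commission * p2 + E0) / (p2 + E0)


if __name__ == "__main__":
    # From bureaucracy (commission 0) to the individual entrepreneur (commission 1)
    for c in (0.0, 0.5, 1.0):
        print(f"commission {c:.1f}: degree of initiative "
              f"{degree_of_initiative(c, 5.0, 1.0):.0%}")
```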

The question immediately arises whether it is possible to achieve both 100% initiative and 100% coordination as in the team theory scenario. One might consider giving both units the full benefit by setting αA = αB = 1 and similarly βA = βB = 1. The problem with this solution is that it uses up twice the benefit that is available. In other words, it does not meet the budget constraint of

$$w^A + w^B \le R$$

It is only possible to give the full marginal benefit to one of the two units, in which case the other unit will exert minimal effort E0. Any solution that meets the budget constraint cannot achieve both 100% initiative and 100% coordination. It is important to note that this is a result of using continuous schemes. Suppose that all the realizations of the parameters were known in advance.23 Then it would be possible to calculate the maximal benefit and to use a discontinuous incentive scheme which gives nothing to the units unless they achieve the maximal benefit. Such a scheme is known as "budget breaking" and can achieve 100% initiative (Holmstrom 1982). However, as was pointed out above, such discontinuous schemes tend to not work well when the realizations are observed before the actions are taken. The case study section and the section on managerial implications will relate this to the increased use of "stretch targets" and "mission statements" in hybrid organizations.

The budget constraint in combination with the linear incentives thus imposes a tradeoff between initiative and coordination. Characterizing this tradeoff turns out to be rather difficult. If one considers the $(p_1^A, p_1^B)$ space, then changes in the commission rates have two effects. First, the rates affect the game played between the two units and thus determine which set of equilibria is possible for given realizations. Second, they also shift the boundaries of the phase diagram for the socially optimal choices, since the commission rates affect the level of effort. This is like chasing a moving target: changes to the commission rates influence both how the units will play and how they should play. Because of this complexity, the actual analysis is relegated to Appendix A3.2 and only the results are shown here.

22 This is shown formally in Appendix A3.2.

23 The following argument also works under uncertainty, but only to the extent that the agents do not observe the realizations before they take their actions.

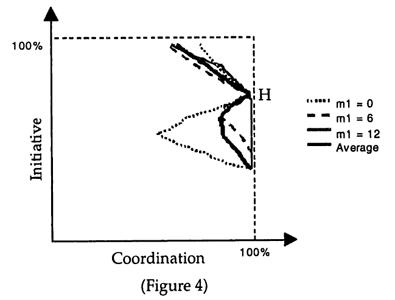

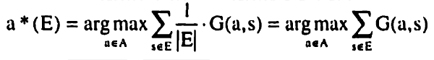

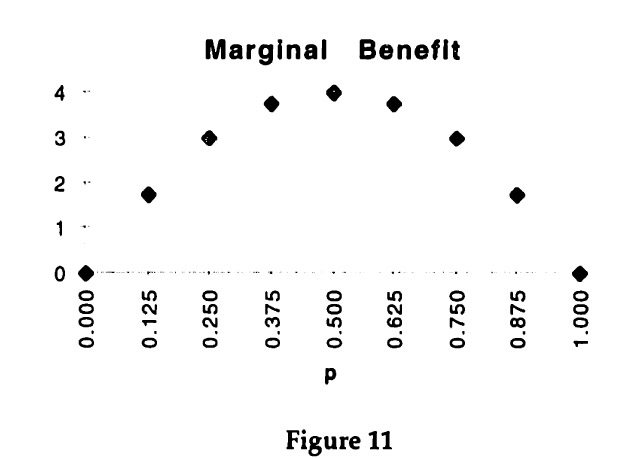

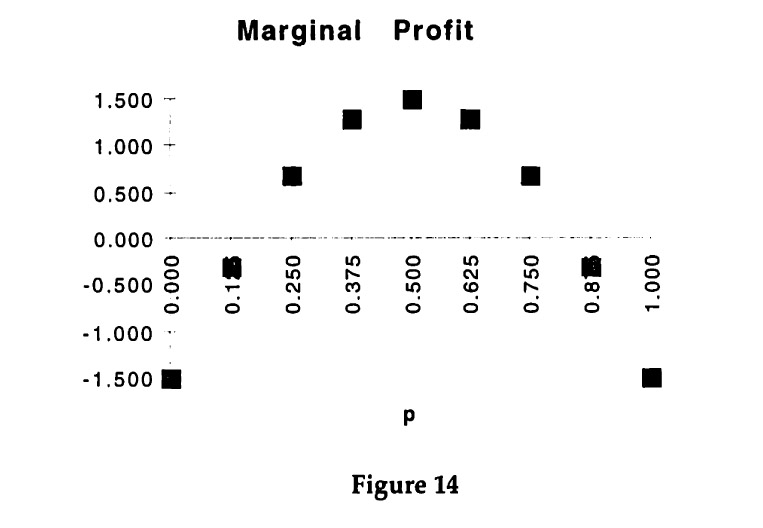

As in the team theory scenario, the approach for the analysis is to first take realizations of the benefits from coordination as given. The following figure shows the conditional initiative - coordination frontiers for selected realizations of m1 as colored lines (m2 = 6). Each frontier is the result of varying the commission rates. First αA and βB are chosen between 0 and 1, which fixes the degree of initiative. Then αB and βA are determined to maximize the degree of coordination. The actual frontier is found as an average across all realizations and is indicated as a solid black line.

Figure 4 provides some important insights into the organizational implications of Networked IT.

- First, for every realization it is possible to achieve close to 100% coordination for a level of initiative that is significantly above the bureaucratic minimum. This is a crucial observation since it helps explain the idea of hybrid organizations. The point where all curves reach almost 100% coordination will be referred to as the "hybrid point" (labeled H in Figure 4). The hybrid point is marked by balanced incentives: each unit's "wage" depends both on its own performance and on the performance of the other unit.

- Second, one can see clearly that as the degree of initiative rises above the hybrid point and approaches 100%, the degree of coordination degrades. The degradation is strongest when the coordination benefit is the same for both tasks (i.e. m1 = m2 = 6), as indicated by the green line. Interestingly, coordination also degrades for levels of initiative below the hybrid point. The error here is over-coordination, i.e. the units deviate too frequently from the local optimum.

- Third, it is no longer easy to determine the optimal point, as it was in the team theory scenario. Most points below the hybrid point are clearly dominated since they involve both less coordination and less initiative. Above the hybrid point, however, there is an important tradeoff: more initiative implies less coordination. Interestingly, the hybrid point, while not necessarily optimal, performs quite well for a large range of situations, whereas 100% initiative does quite poorly for some realizations. This stability further increases the appeal of hybrid organizations: while they may not be optimal for any particular situation, they may be close to optimal for a large number of situations and are thus quite robust.

These insights will be explored in more detail below during the discussions of historical and case study evidence and of managerial implications.

The analysis up to this point has assumed that both units will truthfully report their observations. In Appendix A3.3 it is shown that this will not necessarily be the case. It turns out that emphasizing initiative increases the units' incentives to misrepresent their observations. The balanced incentives found in hybrid organizations thus have the added appeal that they encourage the truthful reporting of information. This factor will be even stronger when the units also observe the coordination benefits, i.e. when unit A reports the realization of m1. This suggests an additional insight for the organizational implications of Networked IT: there may be a role for a center in enforcing truthful reporting. Such enforcement may in fact be supported by Networked IT itself, for instance in the form of more detailed audit trails.

4.4.3 Centralized IT (Incentive Scenario)

As in the Networked IT setting, it will at first be assumed that the units truthfully report their observations.24 It is thus still possible for the central unit to determine the optimal choice of tasks. But the local units will no longer necessarily carry out the central unit's command. Instead, the units will again play a game. This game will now be analyzed. The analysis turns out to be surprisingly similar to the analysis of the No IT setting in the team theory scenario, as presented in Appendix A2.

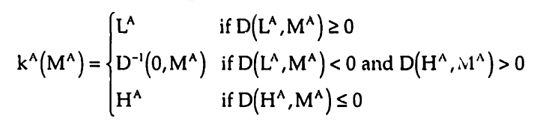

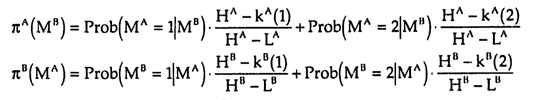

Let πB(MA) denote the probability that unit B will choose task 1, given that unit A has received the message MA = 1, 2 from the central unit (note that the actual message is a single bit and hence either 0 or 1). Unit A will choose task 1 when its expected benefit E[A1] from task 1 is larger than its expected benefit E[A2] from task 2. In Appendix A4.1 it is shown that the choices of effort for unit A are determined by

$$e_i^{A*} = \alpha^A p_i^A + E_0$$

and that the difference in expected benefits is

$$D(p_1^A, M^A) := E[A_1] - E[A_2] = (\alpha^A p_1^A + E_0)^2 - (\alpha^A p_2^A + E_0)^2 + (\alpha^A + \alpha^B)\left\{\pi^B(M^A)\, E[m_1 \mid M^A] - \left(1 - \pi^B(M^A)\right) m_2\right\}$$

The difference between expected benefits is continuous and strictly increasing in p1A. Hence there exists a cutoff kA(MA) such that for p1A ≥ kA(MA) the difference in expected benefits is non-negative, i.e. D(p1A, MA) ≥ 0, and hence unit A will choose task 1. The logic is analogous for unit B.
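The cutoff logic can be made concrete with a short numerical sketch. It evaluates the effort rule and D(p1A, MA) exactly as written above and locates the cutoff kA(MA) by bisection, which is valid because D is strictly increasing in p1A whenever αA > 0. The assumptions are loud ones: p2A is treated as a fixed parameter, the belief πB(MA) and the conditional expectation E[m1 | MA] are passed in directly rather than derived from the equilibrium in Appendix A4.2, and all numeric values (including the search interval) are hypothetical.

```python
import math

# Hypothetical illustration of unit A's cutoff rule; parameter values are made up.


def effort(alpha_own: float, p: float, e0: float) -> float:
    """Optimal effort in a task: e_i^{A*} = alpha^A * p_i^A + E_0."""
    return alpha_own * p + e0


def benefit_difference(p1, p2, alpha_own, alpha_other, pi_other, exp_m1, m2, e0):
    """D(p1A, MA) = E[A1] - E[A2] as written in the text.

    pi_other stands for pi^B(M^A); exp_m1 stands for E[m1 | M^A].
    """
    initiative = effort(alpha_own, p1, e0) ** 2 - effort(alpha_own, p2, e0) ** 2
    coordination = (alpha_own + alpha_other) * (
        pi_other * exp_m1 - (1.0 - pi_other) * m2
    )
    return initiative + coordination


def cutoff(p2, alpha_own, alpha_other, pi_other, exp_m1, m2, e0,
           lo=0.0, hi=100.0, tol=1e-10):
    """Approximate cutoff k^A(M^A): the p1 in [lo, hi] where D crosses zero.

    Returns lo if task 1 is chosen for every p1 in the interval and
    math.inf if task 1 is never chosen within it.
    """
    def d(p1):
        return benefit_difference(p1, p2, alpha_own, alpha_other,
                                  pi_other, exp_m1, m2, e0)

    if d(lo) >= 0:
        return lo
    if d(hi) < 0:
        return math.inf
    while hi - lo > tol:          # bisection on a monotone function
        mid = 0.5 * (lo + hi)
        if d(mid) >= 0:
            hi = mid
        else:
            lo = mid
    return hi


# Hypothetical values: p2A = 1, E0 = 1, E[m1 | MA] = m2 = 6, and unit A
# believes unit B chooses task 1 with probability 0.2 after A's message.
params = dict(p2=1.0, pi_other=0.2, exp_m1=6.0, m2=6.0, e0=1.0)
entrepreneur = cutoff(alpha_own=1.0, alpha_other=0.0, **params)
balanced = cutoff(alpha_own=0.5, alpha_other=0.5, **params)
print(round(entrepreneur, 3), round(balanced, 3))  # 1.757 2.837
```

In this example both incentive schemes put the same total weight (αA + αB = 1) on coordination, but the balanced scheme puts less weight on A's own initiative, so unit A needs a higher p1A before it breaks away from task 2. This is the sense in which more balanced incentives make deviations from coordination less frequent.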

24 As before, it is not possible to apply the revelation principle.

Several insights can be gained by inspecting the determination of the cutoffs. As in the Networked IT setting, the commission rates αA and βB affect the importance of both the benefits from initiative and the benefits from coordination. The commission rates αB and βA on the other hand affect only the importance of the benefits from coordination. It follows immediately that more coordination can be achieved when the incentives are more balanced. The two special cases are also very similar to the Networked IT setting.

- αA = βB = 1 and αB = βA = 0

For individual entrepreneurs it is again true that the degree of initiative is 100%. This follows immediately from the equations for optimal effort. The degree of coordination, however, will generally be less than 100%. For any realization p1j > kj(2), unit j = A, B will choose task 1 despite the central unit's message. Again the intuition is that since the entrepreneurs obtain the full benefits from initiative but only half the benefits from coordination, the units will deviate less frequently from the locally optimal choice than would be necessary for 100% coordination.

- αA = αB = βA = βB = 0

In the case of bureaucracy it again follows immediately that the effort level will drop to E0 and hence that the degree of initiative is less than 100%. Bureaucracy, however, can achieve 100% coordination. The units are now indifferent between the two tasks and hence will follow the central unit's command.

The analysis of the intermediate cases requires a characterization of the equilibrium, which is cumbersome and therefore relegated to Appendix A4.2.

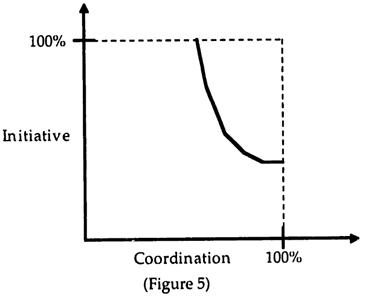

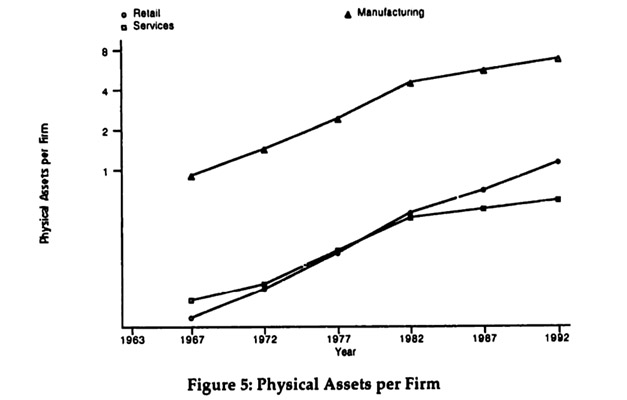

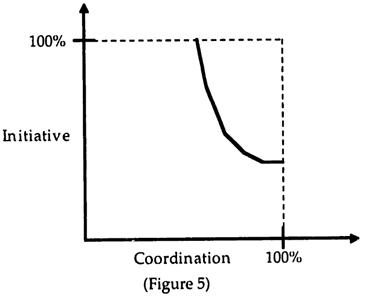

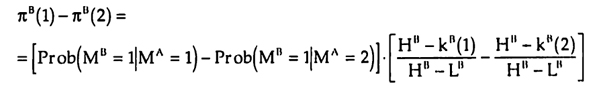

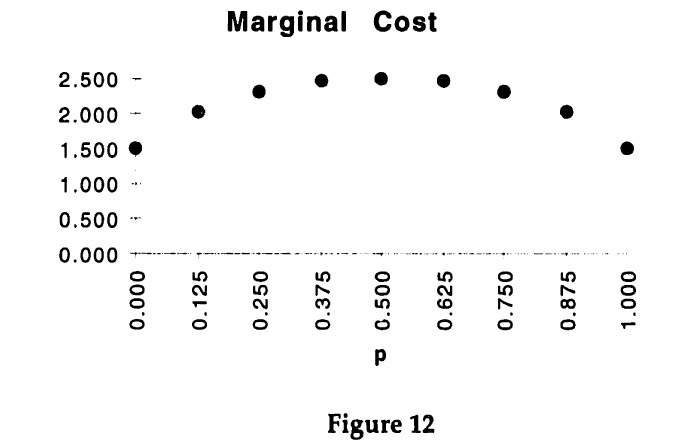

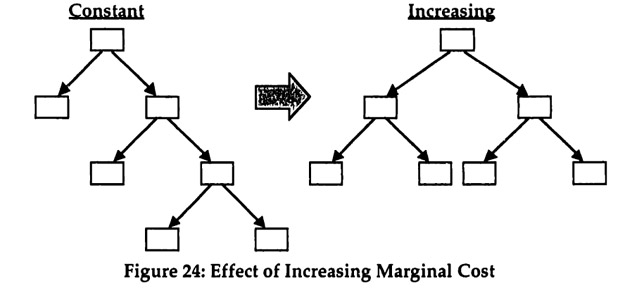

Based on the characterization of the equilibrium in Appendix A4.2, it is possible to give a rough sketch of the initiative - coordination frontier, as shown in Figure 5. For high values of αA and βB, initiative is high, but the cutoffs kj(1) and kj(2) are close together, resulting in under-coordination. As the incentives become more balanced, the cutoffs move further apart. While this improves coordination, it is not possible to achieve 100% coordination. The achievable degree of coordination depends on the distribution of m1: the stronger the variation in m1, the less coordination can be achieved, since the cutoffs are fixed. As the commissions are lowered further, coordination continues to improve as initiative declines. As shown in Appendix A4.2, the area in which perfect coordination can be achieved grows at an increasing rate, which explains the curvature of the frontier. Finally, by setting commissions to 0, it is possible to achieve 100% coordination with minimal initiative.

Figure 5 provides several important insights into the organizational implications of Centralized IT.

- First, the only way to achieve 100% coordination is by reducing initiative to the minimal level by setting all commissions to zero. This extreme point has previously been interpreted as a bureaucracy with flat wages and no ownership. Centralized IT thus favors a bureaucratic organization when coordination is important.

- Second, the only way to achieve 100% initiative is with substantially reduced coordination. This extreme point has previously been interpreted as individual entrepreneurs.

- Third, there is a strong tradeoff between initiative and coordination, i.e. as incentives are increased, initiative improves but coordination degrades. The curvature of the tradeoff suggests that the optimal point is likely to be one of the two extremes of either bureaucracy or individual entrepreneurs.

These insights will be explored in more detail below during the discussions of historical and case study evidence and of managerial implications.

Again, the assumption that the units will truthfully report their observations should be revisited. For the bureaucratic extreme this will obviously be true since the units are indifferent between all the outcomes. However, when initiative is emphasized and especially in the individual entrepreneurs case, the units have an incentive to misrepresent their information. For instance, by over-reporting the realization of p1A, unit A may get the central unit to send out Mj = 1 to both units when for the true realization the messages would have been Mj = 2. Unit A may benefit from this change in messages since it retains 100% of the benefit from its effort, but only 50% of the benefit from coordination. The problem will be especially pronounced when unit A is also in charge of reporting the realization of m1. In addition to processing the information and issuing the messages, the center may thus have to ensure truthful reporting. Note that all of these activities are costly for the center and hence for the individual entrepreneurs case, the central unit will likely have to charge a flat fee, i.e. α0, β0 < 0.
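The misreporting incentive comes down to simple arithmetic, sketched below for the individual entrepreneurs case. The sketch assumes that both units follow the center's messages, so that a successful misreport moves coordination from task 2 (benefit m2) to task 1 (benefit m1), and that unit A keeps 100% of its own net initiative benefit but only 50% of the coordination benefit, as stated above. The function names and all payoff changes are hypothetical.

```python
# Hypothetical payoff changes when a misreport by unit A switches both
# messages from Mj = 2 to Mj = 1 (individual entrepreneurs case).


def private_gain(d_init_own, d_coord, own_share=1.0, coord_share=0.5):
    """Change in unit A's payoff: full own initiative, half of coordination."""
    return own_share * d_init_own + coord_share * d_coord


def social_gain(d_init_a, d_init_b, d_coord):
    """Change in the total benefit R = R1 + Rc."""
    return d_init_a + d_init_b + d_coord


# Illustrative numbers: A's net initiative benefit rises by 2, B's falls by 3,
# and the coordination benefit changes from m2 = 6 to m1 = 5.
m1, m2 = 5.0, 6.0
gain_a = private_gain(2.0, m1 - m2)           # 2 + 0.5 * (-1) = 1.5
gain_total = social_gain(2.0, -3.0, m1 - m2)  # 2 - 3 - 1 = -2.0
print(gain_a, gain_total)
```

Unit A gains because it bears only half of the coordination loss and none of unit B's initiative loss, while the total benefit falls. This is the wedge that the center would have to close by enforcing truthful reporting.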

4.4.4 No IT (Incentive Scenario)

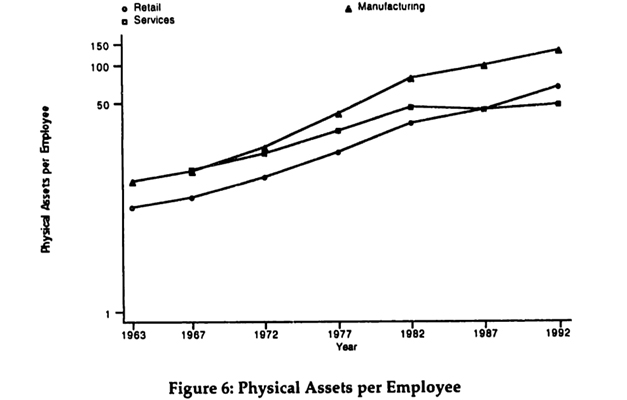

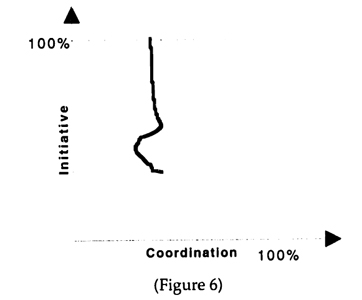

Without IT there is no reporting and hence no assumption about truthfulness needs to be made. The units play a game based solely on their own observations. In the team theory scenario, the optimal decision rule consisted of a cutoff. The equilibrium of the game played by the units in the incentive scenario will involve a similar cutoff. This follows directly from the analysis of the Centralized IT setting given above. The strategies there were conditioned on a message from the central unit, which resulted in two different cutoffs. Therefore, without a message from the central unit there will be only a single cutoff. The analysis, which is carried out in Appendix A5, thus consists of comparing the socially optimal cutoff with the equilibrium cutoff. The resulting initiative - coordination tradeoff is depicted in Figure 6.

Figure 6 provides several important insights into the organizational implications of No IT.

- First, without IT it is impossible to achieve 100% coordination. The highest possible coordination is the same as in the team theory scenario, as indicated by the dashed vertical line. Interestingly, this can be achieved at a degree of initiative that is above the bureaucratic minimum.

- Second, as the degree of initiative approaches 100%, the degree of coordination declines, but only moderately. Even for 100% initiative the degree of coordination is close to the team theory scenario. Hence, while there is a tradeoff between initiative and coordination, it appears likely that the optimal point is at 100% initiative.

These insights will be explored in more detail below during the discussions of historical and case study evidence and of managerial implications.

4.4.5 Summary of Incentive Scenario

The analysis of the incentive scenario thus provides a number of important insights.

- As the degree of initiative rises towards 100% there is a tradeoff with coordination in all IT settings.

- IT expands the tradeoff frontier. With Centralized IT, it is possible to achieve both more coordination and more initiative than without IT, and Networked IT expands the frontier even further.

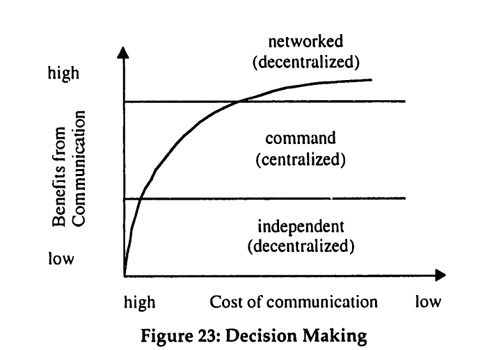

- The different IT settings favor different organizational forms. Without IT, the individual entrepreneur form is favored. Centralized IT favors a bureaucratic form of organization. Finally, Networked IT favors hybrid organizations.

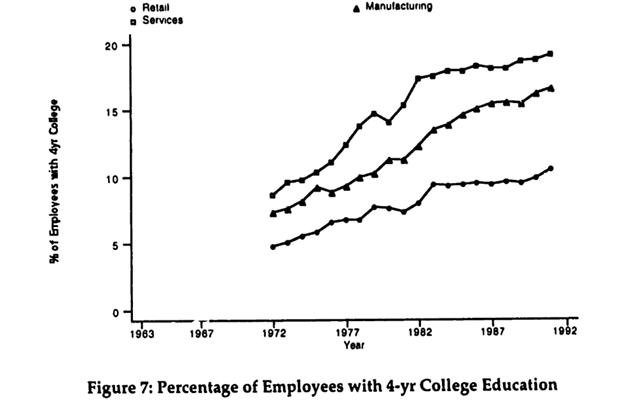

These insights can be summarized by combining the various tradeoff frontiers into a single diagram (Figure 7).

Figure 7 shows how the increased availability of IT expands the tradeoff frontier as indicated by the arrow. The dots indicate the points on the frontier that are favored by the different IT settings. The dashed lines indicate the team theory scenario as depicted in Figure 3. Given the assumptions of the model, the resulting historical evolution of organizations thus proceeds in three stages:

| Stage | Use of IT | Preferred Form of Organization |
|---|---|---|
| 1 | No IT | Individual Entrepreneurs |
| 2 | Centralized IT | Bureaucracy |
| 3 | Networked IT | Hybrid Organization |

This is clearly a highly reduced summary of the analysis. For instance, for certain settings the benefits from initiative may be so important that individual entrepreneurs are the preferred form of organization no matter what the degree of IT use. Nevertheless, the particular pattern of individual entrepreneurs to bureaucracy to hybrid organization should apply to a large number of settings. It has also been identified in work by Malone and Wyner (1996). Sections 5 and 6 present historical and case evidence to support the relevance of this pattern.

4.5 Repetition

The modeling has thus far ignored the issue of repetition. In many organizational settings of interest, the allocation of effort or resources that requires coordination will occur repeatedly. This raises the question of how repetition will affect the results of the analysis. For the team theory scenario the answer is simple: repetition has no effect. First, there are no incentive considerations that could be affected. Second, by assumption, the random variables are uniformly distributed and independent across repetitions so that no additional information becomes available.

In the incentive scenario, repetition plays a more important role since it affects the strategies available to the units. Consider the case of Networked IT. Suppose further that eventually it will always be revealed whether or not a unit truthfully reported the information that it observed. Then it might be possible to find an equilibrium that achieves both 100% initiative and 100% coordination. The threat of a "grim" strategy of always playing the local optimum, or even intentionally choosing the task that minimizes the other unit's payoff, may suffice to get both units to optimally coordinate and exert the optimal effort.

A detailed analysis of the repeated game is beyond the scope of the current paper but is an important issue for future work.25 It would, however, be surprising if an equilibrium existed that overcomes the coordination-initiative trade-off. Most organizational settings are quite dynamic, thus limiting the frequency of repetition. Career and financial concerns often contribute to an emphasis on short-term results, thus effectively giving individuals and businesses high discount rates. In combination these factors significantly reduce the effectiveness of strategies that rely on future punishment to prevent current deviations. The main expected result from such an analysis is thus that repetition will make it possible to sustain higher levels of both coordination and initiative than in the one-shot setting without eliminating the fundamental trade-off.
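Why high discount rates undermine punishment-based strategies can be seen from the standard grim-trigger condition of repeated-game theory. This is a textbook condition, not the repeated-game analysis the paper defers to future work. The sketch assumes hypothetical per-period payoffs for one unit: coop when both units coordinate and exert optimal effort, deviate for a one-period deviation to the local optimum, and punish once both revert to the one-shot equilibrium forever. It also assumes that a deviation is detected and punished from the next period on.

```python
# Textbook grim-trigger check with hypothetical stage payoffs.


def discount_factor(rate: float) -> float:
    """Convert a per-period discount rate r into a discount factor 1 / (1 + r)."""
    return 1.0 / (1.0 + rate)


def critical_discount_factor(coop: float, deviate: float, punish: float) -> float:
    """Smallest delta at which grim trigger deters a one-period deviation."""
    if not deviate > coop > punish:
        raise ValueError("expected deviate > coop > punish")
    return (deviate - coop) / (deviate - punish)


def grim_trigger_sustainable(coop, deviate, punish, delta) -> bool:
    """Compare cooperating forever with deviating once and being punished forever."""
    if not 0.0 <= delta < 1.0:
        raise ValueError("delta must lie in [0, 1)")
    value_cooperate = coop / (1.0 - delta)
    value_deviate = deviate + delta * punish / (1.0 - delta)
    return value_cooperate >= value_deviate


# Hypothetical payoffs: coordinating yields 3, deviating yields 5 once,
# and reverting to the one-shot equilibrium yields 1 per period.
print(critical_discount_factor(3.0, 5.0, 1.0))                        # 0.5
print(grim_trigger_sustainable(3.0, 5.0, 1.0, discount_factor(0.1)))  # patient units
print(grim_trigger_sustainable(3.0, 5.0, 1.0, discount_factor(2.0)))  # short-term focus
```

With these numbers, a 10% discount rate (delta of about 0.91) sustains coordination, while a 200% rate (delta = 1/3) does not. This mirrors the argument above: the stronger the emphasis on short-term results, the weaker the threat of future punishment.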

25 For an interesting related analysis see Baker, Gibbons, and Murphy (1995).

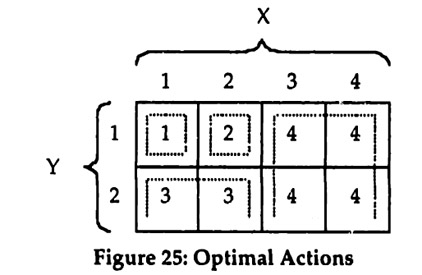

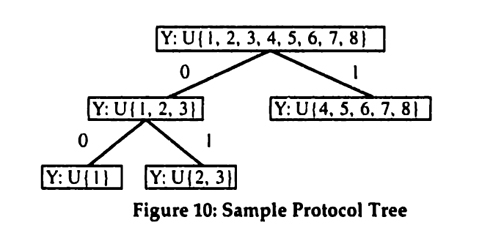

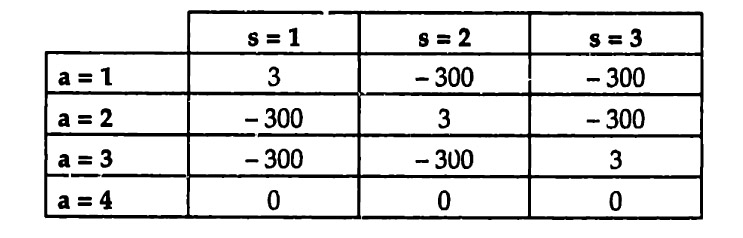

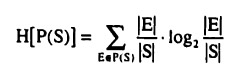

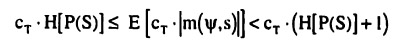

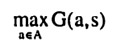

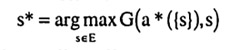

SY, where Sx = {1, 2,3,4} and SY = {1,2}. This two dimensional state space represents the information observed by the two team members X and Y. Let the set of actions be given by A = {1,2,3,4}. Finally, to keep the example really simple, assume that the benefit function is represented by the matrix in Figure 25, where the action shown for each cell has a benefit of 1 in that cell and all other actions have no benefit. It follows immediately that Figure 25 shows the optimal action for each possible state.
